For years, rumors have swirled about Apple's possible entry into the AR/VR market. While other companies have launched headsets, they have failed to inspire widespread consumer adoption.

Apple Vision Pro

The wait finally ended this week when Apple unveiled its first "spatial computer," the Apple Vision Pro, at WWDC23. The device packs in a number of technical innovations that set it apart from previous headsets. In this article, we'll take a high-level look at the Vision Pro headset and discuss the new silicon that powers Apple's first spatial computer.

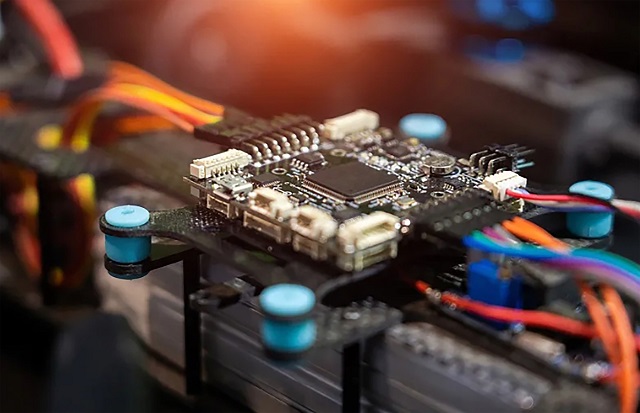

Vision Pro: High Level Overview

The Apple Vision Pro is a mixed reality headset, which means it can function as both a VR headset (fully immersive) and an AR headset (partially immersive). To do this, the device relies on a large array of sensors backed by complex hardware. To interpret the physical world around the user, the headset is equipped with two main front cameras, four downward-facing cameras, two side cameras, a TrueDepth (3D) camera, and a LiDAR scanner. To track the user's eyes, it features a series of infrared cameras and LED illuminators inside the headset.

Outward-facing sensors on the Vision Pro

The Vision Pro uses two processors to handle this sensing and run visionOS: the M2 and the brand-new R1. For visuals, each eye gets a custom micro-OLED display with more pixels than a 4K TV.

Unlike competitors such as Microsoft's HoloLens or the Meta Quest, which house all of their hardware within the headset, Apple's Vision Pro has a battery that is separate from the headset. The battery sits in the user's pocket and connects to the headset via a cable. Separating the battery from the headset keeps the headset lighter and helps prevent it from overheating, both common problems with AR/VR headsets.

R1 chip

One of the biggest hurdles facing AR/VR headsets so far has been nausea caused by the lag between sensing the user's movement and updating what the user sees. Apple claims to have addressed this issue in the Apple Vision Pro with its new in-house R1 chip.

Apple Vision Pro introduces the R1 chip for the first time

Apple hasn't shared many details about the R1 chip, but it has confirmed that the R1 is custom Apple silicon designed for sensor processing and fusion. According to Apple, the R1 simultaneously processes input from 12 cameras, five sensors, and six microphones with extremely low latency to eliminate the lag between what the sensors capture and what the user experiences. Apple also says the R1 can stream new images to the displays within 12 milliseconds, eight times faster than the blink of an eye.
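To put the 12-millisecond figure in perspective, here is a small back-of-the-envelope sketch in Python. It uses only the two numbers quoted above (12 ms and "eight times"); the function names are ours, and the updates-per-second figure assumes images are delivered strictly one after another, which may not reflect how the R1 actually pipelines its work.

```python
# Figures quoted from Apple's announcement; everything else is illustrative.
IMAGE_LATENCY_MS = 12.0  # claimed upper bound for delivering a new image
BLINK_SPEEDUP = 8        # "eight times faster" than a blink


def implied_blink_ms(latency_ms: float, speedup: float) -> float:
    """Blink duration implied by the 'N times faster than a blink' comparison."""
    return latency_ms * speedup


def sequential_updates_per_second(latency_ms: float) -> float:
    """Updates per second if each update took the full latency, back to back.

    Assumption: no pipelining or overlap between updates.
    """
    return 1000.0 / latency_ms


if __name__ == "__main__":
    blink = implied_blink_ms(IMAGE_LATENCY_MS, BLINK_SPEEDUP)
    rate = sequential_updates_per_second(IMAGE_LATENCY_MS)
    print(f"Implied blink duration: {blink:.0f} ms")
    print(f"Sequential updates per second at 12 ms each: {rate:.1f}")
```

In other words, Apple's comparison implies a blink of roughly a tenth of a second, and a 12 ms budget leaves room for more than 80 image updates every second even without any overlap between them.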

The R1 works alongside Apple's M2 SoC in the Apple Vision Pro, with the M2 doing the heavy lifting for non-sensor computing. The M2 delivers up to 15.8 TOPS of AI compute and 100 GB/s of memory bandwidth.
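As a rough illustration of what those two M2 figures mean together, the Python sketch below computes how many operations the chip could perform per byte fetched from memory at peak, a simple roofline-style ratio. Both inputs are the peak numbers quoted above; the function names and the 1 GB example are our own assumptions, and real workloads rarely reach either peak.

```python
# Peak figures quoted for the M2; treat results as upper bounds, not measurements.
PEAK_TOPS = 15.8             # trillions of operations per second (AI compute)
PEAK_BANDWIDTH_GB_S = 100.0  # gigabytes per second of memory bandwidth


def ops_per_byte(tops: float, bandwidth_gb_s: float) -> float:
    """Operations available per byte of memory traffic when both run at peak."""
    ops_per_second = tops * 1e12
    bytes_per_second = bandwidth_gb_s * 1e9
    return ops_per_second / bytes_per_second


def transfer_time_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Time in milliseconds to stream size_gb of data at peak bandwidth."""
    return size_gb / bandwidth_gb_s * 1000.0


if __name__ == "__main__":
    ratio = ops_per_byte(PEAK_TOPS, PEAK_BANDWIDTH_GB_S)
    print(f"Peak operations per byte of memory traffic: {ratio:.0f}")
    # Hypothetical example: streaming 1 GB of data once through memory.
    print(f"Time to stream 1 GB: {transfer_time_ms(1.0, PEAK_BANDWIDTH_GB_S):.0f} ms")
```

A workload that performs far fewer operations per byte than this ratio would tend to be limited by memory bandwidth rather than by compute.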

AR/VR has long been touted as the potential next wave in computing, but so far no company has delivered a product that makes it a reality. Whether Apple's Vision Pro will be the headset that changes the trajectory of AR/VR headsets remains to be seen!

